(See also the Spanish version)

How likely would it be to win any prize having absolutely no clue about the questions? In the view of decision theoretic methods, would it be better to bet all to just one answer?

To solve these and other doubts that probably keep you awake all night I have created an online simulator and, for those of you with an interest for the Maths, you will also find below all the statistical foundations. Otherwise, you can skip straight to section 2 for some quick results.

1. The rules

In this TV show contestants start with 40 bundles of £50 notes, and the game consists in splitting them among the different options offered in a series of eight questions. Four possible answers are offered in the first four questions, three for the next three questions and only two choices exist for the final question.

The main rule is that one answer (at least) must remain without any bet on it. For example, in the first four questions one could split the bundles among a maximum of three answers. The money remaining after the last question becomes the prize of the contestants, who attend excited thinking of future purchases such as new PS3s… 😉

2. For the impatient readers: some quick results

- If you have absolutely no idea on which one is the right answer and…

- … you always bet all your bundles randomly to only one answer, you might pass the game with a probability as low as 0.0046%. In that extremely unlikely event you would leave with the maximum prize! Still, we must be realistic: there are 99.61% chances that you would never make it to the fifth question.

- … you always evenly divide your bundles among the maximum number of answers, discarding one of them (because those are the rules!) at random. Then you could have a 12.39% chances of making to the fifth question, but leaving the program with your hands full would still only occur with a 0.285%. In particular, a 0.2765% of leaving with just one bundle. Fair enough, given your absolute ignorance on the questions!

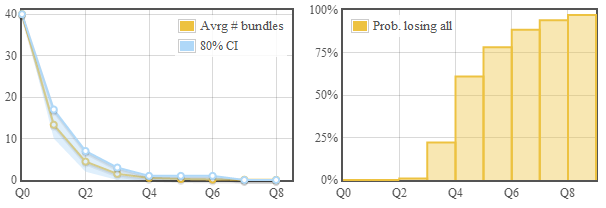

- Another more common scenario is having the possibility of confidently discarding one question, either because it is an absurd answer or because you really know for sure it is wrong. Assuming such a perfect discarding at all stages but the last one (the eighth question can be assumed to be harder) and that bundles are uniformly distributed among the rest of answers, we end up with a great 3% of winning something!… but with a 2.91% it would be only one bundle. Game will be in this case a bit more interesting, since there are 31.96% chances of succeeding at the fourth question. See the full results for this case in the next graphs (taken from the simulator below).

- One final situation to illustrate the decimation caused by the first four questions: assuming a 100% correct answer to these first fours and a blind random play in the following, we would have a 15% of passing the eighth question. Compare it to the mere 0.0046% of playing blind from the beginning. Furthermore, the most likely situation if you win something is leaving with 5 bundles, occurring with a 2.82%.

3. Simulator

Below you can find a calculator for this game. This is my first JavaScript program even, and despite I liked the experience, I can’t promise it would work on every Browser!

I would recommend to start playing with the sliders of the probabilities for correct discards. Recall: a discard means that nothing will be bet to one or more of the answers. You can also play with the number of those discards (e.g. discard=3 in the first question means that all goes to only one answer).

As you move the sliders, observe how the histograms (probability mass functions, strictly speaking) vary at the bottom. If you are curious on how all these computations take place, continue reading below.

4. The Maths

Firstly we must define the state space, that is, which variables will be used to model our problem.

We have nine stages: an initial one (0) and one stage after each of the eight questions (1-8). The game state at each stage can be perfectly summarized with one single integer number: the amount of bundles still in the game.

We will call (K_i) to this number of bundles for the i’th stage, with i=0…8. We know for sure that we start with 40 bundles, so (K_0=40). For the rest of stages we will need assistance from statistics, since we must somehow model our uncertainty on how events could develop during the game.

A probability mass function (pmf) is a function telling us the likelihood of every single different possible state in our problem. We denote pmf’s with a capital P. Its value is 0 for impossible events and 1 for unavoidable ones. For those of you unfamiliar with statistics, it’s more likely you heard of probabilities multiplied by 100, in which case we have probability given in % (percent).

At the beginning of the game, we know the pmf since it’s impossible to have 0,1,2,…39 bundles and we know for sure we will always have 40 of them. Thus we have a function with all zeroes but a one at 40:

[ P(N_0=k) = left{ begin{array}{l} 0 , k=0,…,39 \ 1, k=40 end{array} right. ]

Take a look now at the «Q0» histogram in the simulator above. It’s exactly this pmf!

Now, the cool stuff. What happens after the first question? Or, even better: what happens, in general, to the pmf between an stage i and the next stage i+1? Think about it; so many things could happen: we can divide the bundles in many different ways, lose any amount of them, etc.

In order to make the problem tractable we invoke the Law of Total Probability. It tells us that we can compute the pmf at stage i+1, (P(N_{i+1})) as:

[ P(N_{i+1}=k) = sum_{q=0…40} P(N_{i+1}=k | N_i = q ) P(N_i = q) ]

Or put in words: we must account for all the possible, independent pathways (that would be the sum) by which we could end up having k bundles, using the conditional probabilities (those like A|B) of previously having q of them and, of course, weighting each path by its probability, ( P(N_i = q) ).

The formula above has two wonderful properties: since we work on a discrete state space we can easily perform the exact sum (things become much harder for continuous variables) and, furthermore, it allows us to sequentially compute any state provided the knowledge of the previous one (and we indeed know the initial state!).

Those of you familiar with convolution might have noticed the similarity of this operation, given the aspect of the histograms in the simulator above: each histogram is «wider» than its precursor. However, a key difference with convolution here is that we can’t get below a limit (k=0), so probabilities tend to accumulate at that point.. and our poor contestant tends to leave empty-handed!

It only remains to be defined the probability transition function for the different stages, which appeared in the equation as the conditional distribution. Applying the law of total probability again we see that it is actually a mix of two other distributions, each pondered by the probability of correctly discarding (P=α) and failing in the discarding (P=1-α):

[ P(N_{i+1}=k | N_i = q ) = alpha P(N_{i+1}=k | N_i = q, GoodDiscard) +\ (1-alpha) P(N_{i+1}=k | N_i = q, BadDiscard) ]

In case of failing things are simple: if you fail discarding it means that nothing was bet against the correct answer, therefore at the next stage you will have (for sure) no bundles at all:

[ P(N_{i+1}=k | N_i = q, BadDiscard) = left{ begin{array}{l} 1, k=0 \ 0 , k=1,…,40end{array} right. ]

The emerging statistic is very well known: the Binomial distribution. Thus, we have:

[ P(N_{i+1}=k | N_i = q, GoodDiscard) = B(k; q,β) ]

For those interested in all the details, take a look at the source code (GNU GPL3).

I hope you enjoyed reading and learning on Statistics!